What are the Philosophical Foundations of Cybernetics?

Cybernetics, at its core, is a fascinating blend of **philosophy**, **science**, and **technology** that examines the intricate relationships between systems, communication, and control. The philosophical foundations of cybernetics delve into how we understand not just machines, but also living organisms as systems that process information and respond to their environments. This field invites us to ponder profound questions: How do we define intelligence? What does it mean to be adaptive? And how do feedback loops shape our reality?

The roots of cybernetics can be traced back to the 1940s, when thinkers like Norbert Wiener began to explore the connections between **communication** and **control** in both biological and artificial systems. It’s almost like a dance in which each partner, be it a human, an animal, or a machine, responds to the movements of the other. This interplay forms the essence of cybernetic theory, which posits that systems are not merely static entities but dynamic processes that evolve over time.

One of the key philosophical questions that arise in this context is: **What is the nature of information?** In cybernetics, information is not just data; it is a vital currency that enables systems to make decisions and adapt to changes. Just as a river flows and changes shape with the landscape, information flows through systems, shaping their behavior and structure. This perspective challenges traditional notions of separation between the observer and the observed, suggesting a more integrated view of existence where everything is interconnected.

Moreover, the implications of cybernetics extend beyond mere theoretical musings. They touch on practical applications in various fields—from biology to engineering to artificial intelligence. For instance, understanding feedback mechanisms can lead to better designs in technology, while insights into self-organization can inspire new approaches in ecological conservation. The philosophical foundations of cybernetics thus serve as a bridge between abstract thought and tangible action, prompting us to consider how we can harness these principles to create a more sustainable and intelligent future.

As we explore further, we find that the philosophical underpinnings of cybernetics challenge us to rethink our place in the universe. Are we merely passive observers of systems around us, or do we actively shape them through our interactions? This question resonates deeply in today’s world, where technology is ever-present and increasingly sophisticated. The philosophical foundations of cybernetics encourage us to reflect on our role as **agents of change**, emphasizing the importance of understanding the systems we inhabit and influence.

- What is the main focus of cybernetics? Cybernetics primarily focuses on the study of systems, communication, and control across various disciplines, emphasizing the interconnections between biological and artificial entities.

- Who are the key figures in the development of cybernetics? Norbert Wiener is often regarded as the father of cybernetics, but many other thinkers have contributed to its evolution, including Ross Ashby and Heinz von Foerster.

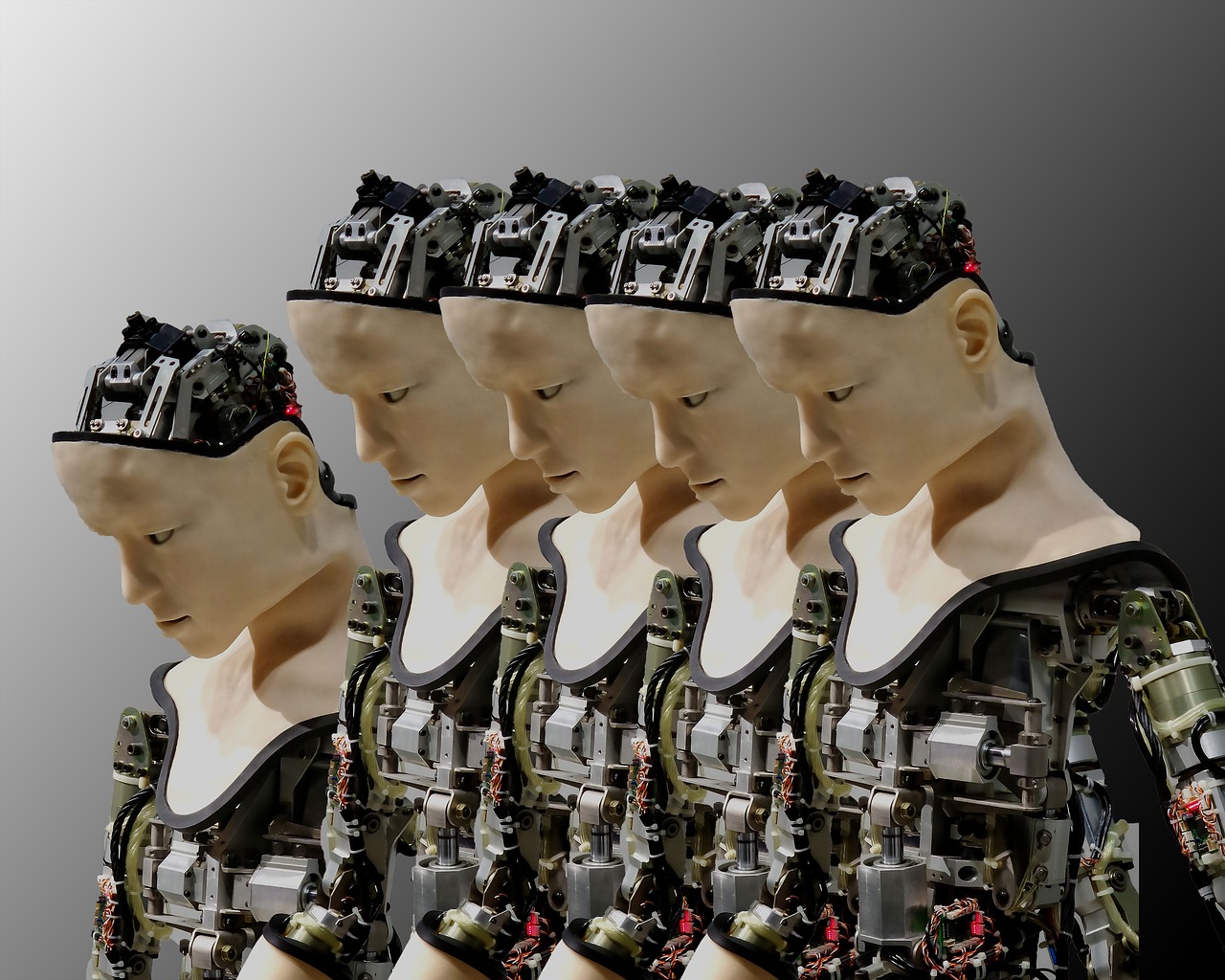

- How does cybernetics relate to artificial intelligence? Cybernetics provides foundational principles that inform the design and functioning of artificial intelligence systems, particularly in areas like adaptive learning and feedback mechanisms.

- What ethical considerations arise from cybernetics? Ethical dilemmas include issues of autonomy, decision-making, and the societal impacts of increasingly autonomous systems, prompting discussions on responsible AI development.

The Origins of Cybernetics

Cybernetics, a term coined by the brilliant mathematician and philosopher Norbert Wiener in the mid-20th century, has roots that stretch deep into the soil of various disciplines, including mathematics, engineering, biology, and even philosophy. But what exactly sparked this interdisciplinary revolution? To understand the origins of cybernetics, one must dive into the historical context of the time, where the convergence of technology and biological sciences began to reshape our understanding of systems and control.

Wiener, often regarded as the father of cybernetics, was influenced by the rapid advancements in technology during World War II. The development of radar and the need for effective communication systems led to a growing interest in understanding how systems could be designed to manage information and control processes. This era was marked by a fascination with feedback mechanisms—how systems could self-regulate and adapt to changing conditions. Wiener’s work was not just a response to technological advancements; it was a philosophical inquiry into the nature of communication and control.

In his seminal book, “Cybernetics: Or Control and Communication in the Animal and the Machine”, published in 1948, Wiener laid the groundwork for understanding complex systems. He emphasized the parallels between biological organisms and machines, proposing that both operate on similar principles of feedback and control. This was a groundbreaking idea that shifted how we perceive interactions within systems, whether they are natural or artificial.

Wiener's ideas were further bolstered by the contributions of other intellectual giants. For instance, Ross Ashby built the “homeostat,” an electromechanical device that maintained stability through feedback loops. Ashby’s work illustrated that systems could reach equilibrium and adapt to external changes without losing their essential characteristics. This idea opened the door to applications in many fields, from biology and engineering to the social sciences.

Moreover, the influence of information theory, developed by Claude Shannon, cannot be overlooked. Shannon’s theories on communication and information flow provided a mathematical framework that complemented Wiener’s philosophical insights. Together, these pioneers established a foundation for understanding how information is processed and utilized within systems, paving the way for future innovations in technology and artificial intelligence.

In summary, the origins of cybernetics are deeply intertwined with the historical, technological, and philosophical currents of the 20th century. It emerged as a response to the complexities of communication and control in both biological and mechanical systems. As we delve deeper into the principles of cybernetics, it becomes clear that its interdisciplinary nature is not just a product of its time, but a lens through which we can understand the intricate dance of systems in our world today.

Key Concepts in Cybernetics

Cybernetics is a fascinating field that dives deep into the intricate dance of systems, communication, and control. At its core, it revolves around several key concepts that help us understand how systems—both biological and artificial—interact with their environments. Think of these concepts as the building blocks of cybernetic theory, each playing a pivotal role in how we perceive and design systems. Among these concepts, feedback loops, homeostasis, and self-organization stand out as essential principles that govern behavior and adaptation.

To truly grasp the essence of cybernetics, it is crucial to explore these concepts in detail. Feedback loops, for instance, can be likened to a conversation between a system and its environment. Just as you adjust your tone or words based on the reactions of your listener, systems respond to outputs by modifying their behaviors. This dynamic interaction can be categorized into two primary types: positive feedback and negative feedback. Each type has its unique implications and applications, shaping the stability and evolution of systems.

Feedback mechanisms are the lifeblood of cybernetic systems. They enable a system to adapt and evolve by continuously monitoring its outputs and adjusting accordingly. Imagine a thermostat in your home: it senses the temperature and either heats or cools the environment to maintain your desired comfort level. This is a classic example of how feedback loops operate in practice. Feedback mechanisms can be classified into two categories:

- Positive Feedback: This type of feedback amplifies changes, leading to growth or, in some cases, collapse. For example, in an ecosystem, a small increase in a population means more individuals reproducing, which further increases that population until it potentially exceeds the carrying capacity of the environment.

- Negative Feedback: In contrast, negative feedback stabilizes a system by counteracting deviations from a set point. This is crucial for maintaining homeostasis in biological organisms, such as regulating body temperature or blood sugar levels.

Understanding these feedback mechanisms is essential for grasping how systems function and adapt. They not only apply to natural systems but also to engineered systems, such as control systems in technology and robotics, where maintaining balance and performance is key.
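To make the two categories concrete, here is a minimal Python sketch. It assumes a deliberately simple, hypothetical model in which a single value is updated in proportion to its deviation from a set point; the function name `simulate_loop` and the numbers are illustrative, not taken from any particular system.

```python
def simulate_loop(x0, set_point, gain, steps):
    """Iterate x <- x + gain * (x - set_point) and return the trajectory.

    gain > 0      : the deviation grows each step (positive feedback).
    -1 < gain < 0 : the deviation shrinks each step (negative feedback).
    """
    xs = [x0]
    for _ in range(steps):
        deviation = xs[-1] - set_point
        xs.append(xs[-1] + gain * deviation)
    return xs


# Same starting point and set point, opposite sign of the feedback gain.
amplified = simulate_loop(x0=21.0, set_point=20.0, gain=0.5, steps=5)
stabilized = simulate_loop(x0=21.0, set_point=20.0, gain=-0.5, steps=5)

print("positive feedback:", amplified)
print("negative feedback:", stabilized)
```

With a positive gain the value drifts further from the set point on every step; with a negative gain it closes half of the remaining gap each time. Real systems are rarely this linear, so treat this as an intuition aid only.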

Another cornerstone of cybernetics is the concept of self-organization. This refers to the ability of systems to spontaneously develop complex structures and patterns without external guidance. Think of a flock of birds flying in perfect formation or the way ant colonies organize themselves to find food. These phenomena arise from simple local interactions between individual components, leading to emergent behavior that is greater than the sum of its parts.

Self-organization challenges traditional views of control and hierarchy, suggesting that order can emerge from chaos. This principle has profound implications not only in nature but also in technology, where understanding self-organization can lead to innovations in areas like artificial intelligence and adaptive systems. The beauty of self-organization lies in its potential to create resilient and adaptive systems that can thrive in changing environments.

In summary, the key concepts of cybernetics—feedback loops, homeostasis, and self-organization—serve as essential lenses through which we can analyze and design complex systems. By understanding these principles, we can better navigate the interplay between systems, communication, and control, paving the way for advancements in both biological and artificial contexts.

What is cybernetics?

Cybernetics is an interdisciplinary field that studies the structure, communication, and control in complex systems, both biological and artificial. It focuses on how systems self-regulate and adapt to changes in their environments.

What are feedback loops?

Feedback loops are processes in which the outputs of a system are fed back into it, influencing future outputs. They can be positive (amplifying changes) or negative (stabilizing the system).

How does self-organization work?

Self-organization refers to the spontaneous emergence of order and complex structures in systems without external direction. It occurs through local interactions among components, leading to collective behavior.

Why are these concepts important?

Understanding these concepts is crucial for analyzing and designing systems in various fields, including biology, engineering, and artificial intelligence. They provide insights into how systems can adapt, learn, and maintain stability.

Feedback Mechanisms

Feedback mechanisms are the backbone of cybernetics, acting like the pulse of a living organism, constantly adjusting and recalibrating based on the information they receive. Imagine driving a car: you turn the steering wheel, watch where the car actually goes, and correct your steering if it drifts out of the lane. That cycle of acting, observing the result, and adjusting is a simple analogy for how feedback works in systems. In cybernetics, feedback loops allow systems, whether biological, mechanical, or social, to modify their actions based on the outcomes of their previous actions. This dynamic interaction is crucial for maintaining balance and enabling adaptation in the face of changing conditions.

There are two primary types of feedback mechanisms: positive feedback and negative feedback. Each serves a unique purpose and influences the behavior of systems in distinct ways. Positive feedback is like a snowball rolling down a hill, gaining momentum as it goes. It amplifies changes and can lead to rapid growth or, in some cases, catastrophic failure. On the other hand, negative feedback acts as a stabilizing force, akin to a thermostat that regulates temperature by turning the heating system on or off. This balance is essential for the overall health and functionality of systems.

To illustrate these concepts further, let’s take a look at how feedback mechanisms play out in various contexts:

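| Type of Feedback | Description | Examples |
|---|---|---|
| Positive Feedback | Amplifies changes, leading to growth or collapse. | Population growth, melting polar ice, stock market bubbles |
| Negative Feedback | Stabilizes systems by counteracting deviations. | Thermostats, body temperature regulation, cruise control |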

Understanding feedback mechanisms is not just an academic exercise; it has real-world implications. For instance, in the realm of artificial intelligence, algorithms often rely on feedback to learn from their mistakes and improve their performance over time. Just like a child learns to walk by adjusting their balance based on previous attempts, machines utilize feedback to refine their processes. This adaptive learning is what makes AI systems increasingly capable and efficient.

In conclusion, feedback mechanisms are fundamental to the operation of systems across various domains. They enable adaptability, stability, and growth, shaping the way we understand both natural and artificial systems. By grasping these concepts, we can better appreciate the intricate dance of interactions that govern our world.

- What is the difference between positive and negative feedback? Positive feedback amplifies changes, leading to growth or collapse, while negative feedback stabilizes systems by counteracting deviations from a set point.

- How do feedback mechanisms apply to artificial intelligence? AI systems use feedback to learn from their actions, allowing them to improve performance over time, similar to how humans learn from experience.

- Can feedback mechanisms be found in nature? Absolutely! Feedback mechanisms are prevalent in natural systems, such as ecosystems and biological processes, where they help maintain balance and adapt to changes.

Positive Feedback

Positive feedback is a fascinating concept in the realm of cybernetics, and it plays a pivotal role in understanding how systems can evolve and change over time. Imagine a snowball rolling down a hill; as it gathers more snow, it grows larger and accelerates, creating a cycle of growth that can lead to surprising outcomes. This is essentially what positive feedback does within a system—it amplifies changes, leading to exponential growth or, in some cases, catastrophic collapse.

To illustrate this concept further, let’s consider a few examples from both natural and artificial systems:

- Climate Systems: In nature, positive feedback can be seen in the melting of polar ice caps. As ice melts, it exposes darker ocean water, which absorbs more sunlight and accelerates warming, leading to even more ice melting. This cycle can spiral out of control, illustrating the potential dangers of unchecked positive feedback.

- Economic Systems: In financial markets, a classic example is a stock market bubble. When stock prices rise, investor confidence increases, leading more people to buy stocks, which drives prices even higher. Prices can inflate to unsustainable levels before crashing.

What’s particularly intriguing about positive feedback is its dual nature. While it can drive innovation and growth, it can also lead to instability if not managed properly. This duality is a critical consideration for anyone involved in system design, whether in ecology, economics, or engineering. Understanding when and how to harness positive feedback can mean the difference between success and failure.
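The difference between unchecked and limited positive feedback can be sketched with a toy population model. The example below assumes the standard discrete logistic growth equation with made-up parameters (`rate`, `capacity`); it is an illustration of the idea, not a model of any real ecosystem or market.

```python
def logistic_growth(population, rate, capacity, steps):
    """Discrete logistic growth.

    Growth is proportional to the current population (the positive
    feedback) and is scaled down as the population approaches the
    carrying capacity (the limiting term).
    """
    history = [population]
    for _ in range(steps):
        growth = rate * population * (1 - population / capacity)
        population += growth
        history.append(population)
    return history


# Pure positive feedback: every step multiplies the population by 1.5.
unchecked = [10.0 * 1.5 ** t for t in range(21)]

# The same growth rate, but limited by a hypothetical carrying capacity.
limited = logistic_growth(10.0, rate=0.5, capacity=1000.0, steps=20)

print("unchecked after 20 steps:", round(unchecked[-1]))
print("limited after 20 steps:  ", round(limited[-1]))
```

Both series start out growing at almost the same pace, since the limiting term is negligible while the population is small. Only the limited series levels off below the capacity, which is one way to picture positive feedback that is "managed" rather than left to run away.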

In summary, positive feedback can be a double-edged sword. It can lead to remarkable growth and advancements, but it also requires careful monitoring to prevent potential disasters. As we dive deeper into the interplay of systems and feedback mechanisms, we begin to appreciate the complexity and beauty of cybernetics, where every action has the potential to create ripples far beyond our immediate perception.

- What is positive feedback in cybernetics? Positive feedback refers to a process where the output of a system amplifies the initial input, leading to exponential growth or change.

- Can positive feedback be beneficial? Yes, it can drive innovation and growth in various systems, but it also needs to be monitored to prevent instability.

- How does positive feedback differ from negative feedback? While positive feedback amplifies changes, negative feedback works to stabilize a system by counteracting deviations from a set point.

Negative Feedback

Negative feedback is an essential concept in cybernetics that plays a pivotal role in maintaining stability within various systems. Imagine a thermostat in your home: it senses the temperature and adjusts the heating or cooling to keep the environment comfortable. This dynamic is a classic example of negative feedback at work. When the temperature rises above a certain point, the thermostat kicks in to cool the space down, and when it falls below that point, it activates the heater. This constant adjustment keeps the system in a state of equilibrium.
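The thermostat described above can be sketched in a few lines of Python. This is a simplified, hypothetical model: the room warms by a fixed `outside_drift` each step, and the controller applies a fixed amount of heating or cooling whenever the temperature leaves a small comfort band around the set point. All names and numbers are assumptions chosen for illustration.

```python
def thermostat_step(temperature, set_point, band=0.5, power=1.0):
    """Return the temperature change the controller applies this step."""
    if temperature > set_point + band:
        return -power  # too warm: cool the room
    if temperature < set_point - band:
        return power   # too cold: heat the room
    return 0.0         # inside the comfort band: do nothing


def simulate_room(start, set_point, outside_drift, steps):
    """Apply a constant disturbance, then the thermostat's correction."""
    temperature = start
    history = [temperature]
    for _ in range(steps):
        temperature += outside_drift                            # disturbance
        temperature += thermostat_step(temperature, set_point)  # correction
        history.append(temperature)
    return history


history = simulate_room(start=26.0, set_point=21.0, outside_drift=0.25, steps=30)
print(history)
```

The room starts well above the set point, is pulled down step by step, and then hovers inside the comfort band even though the disturbance never stops. That persistent correction against deviation is the defining feature of negative feedback.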

In biological systems, negative feedback is crucial for survival. Take the human body, for instance. Our body temperature is regulated through negative feedback mechanisms. If our temperature rises due to external heat or fever, our body initiates processes like sweating to cool down. Conversely, if we get too cold, we shiver to generate heat. This self-regulating behavior is vital for homeostasis, ensuring that our internal environment remains stable despite external fluctuations.

In engineering and technology, negative feedback is equally important. For example, in automated control systems, engineers design feedback loops to ensure that machines operate within desired parameters. This can be seen in various applications, from cruise control in cars to industrial processes where precision is key. By continuously monitoring outputs and making adjustments, these systems can prevent errors and improve efficiency.

To illustrate the concept further, consider the following table that summarizes the key characteristics of negative feedback:

| Characteristic | Description |
|---|---|
| Stability | Helps maintain equilibrium in systems |
| Response | Adjusts behavior based on deviations from a set point |
| Applications | Used in biological, mechanical, and electronic systems |

However, while negative feedback is generally stabilizing, it can also lead to unintended consequences if not properly managed. For instance, in ecological systems, excessive negative feedback can stifle diversity and resilience, making environments more susceptible to collapse under stress. Similarly, in technology, rigid feedback systems may hinder innovation and adaptability. Thus, understanding the balance between feedback mechanisms is crucial for both natural and artificial systems.

In summary, negative feedback is a fundamental principle that helps systems maintain stability and adapt to changes. Whether in nature or technology, its significance cannot be overstated. As we delve deeper into the realms of cybernetics and artificial intelligence, recognizing the role of negative feedback will be key to developing more resilient and adaptive systems.

- What is negative feedback? Negative feedback is a process where a system self-regulates by counteracting deviations from a desired state, ensuring stability.

- Can you give an example of negative feedback in nature? Yes, human body temperature regulation is a prime example, where processes like sweating and shivering maintain a stable internal environment.

- How is negative feedback used in technology? In technology, negative feedback is implemented in control systems to ensure machines operate within set parameters, enhancing efficiency and accuracy.

Self-Organization

Self-organization is one of the most fascinating concepts in cybernetics, embodying the idea that systems can spontaneously create order and complexity without any external direction. Imagine a flock of birds flying in perfect formation or the way snowflakes form intricate patterns as they fall—these are perfect examples of self-organization in nature. This phenomenon occurs across various scales and systems, from the microscopic to the cosmic, illustrating the inherent ability of systems to adapt and evolve in response to their environment.

At its core, self-organization challenges the traditional notion of control and hierarchy. Instead of being directed by a central authority, self-organizing systems rely on local interactions among their components. These interactions can lead to emergent behaviors that are greater than the sum of their parts. For instance, in a bustling city, no single person orchestrates the flow of traffic; instead, the collective behavior of drivers, pedestrians, and traffic signals creates a dynamic system that adapts to changing conditions.

One of the key features of self-organization is the role of feedback loops. In these systems, feedback can either reinforce or dampen changes, allowing the system to maintain stability or shift to a new equilibrium. For example, in ecosystems, predator-prey dynamics exemplify this concept. When a predator population increases, the prey population may decrease, which in turn causes the predator population to decline due to a lack of food, illustrating a complex interplay of feedback that maintains balance.
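The predator-prey interplay can be illustrated with the classic Lotka-Volterra equations, which are a common textbook model and not something the discussion above commits to. The sketch below integrates them with a simple Euler step; the parameter values and starting populations are arbitrary assumptions.

```python
def lotka_volterra(prey, predators, steps, dt=0.001,
                   prey_growth=1.0, predation=0.1,
                   predator_death=1.5, conversion=0.075):
    """Euler integration of the Lotka-Volterra predator-prey equations."""
    prey_history, predator_history = [prey], [predators]
    for _ in range(steps):
        # Prey multiply on their own and are eaten in proportion to predators.
        d_prey = (prey_growth - predation * predators) * prey
        # Predators die off on their own and grow in proportion to prey eaten.
        d_predators = (conversion * prey - predator_death) * predators
        prey += d_prey * dt
        predators += d_predators * dt
        prey_history.append(prey)
        predator_history.append(predators)
    return prey_history, predator_history


prey_history, predator_history = lotka_volterra(prey=10.0, predators=5.0, steps=15000)
print("prey range:     ", round(min(prey_history), 1), "to", round(max(prey_history), 1))
print("predator range: ", round(min(predator_history), 1), "to", round(max(predator_history), 1))
```

Neither population settles at a fixed value in this model. Each rises and falls in response to the other, so the "balance" here is a repeating cycle around an equilibrium rather than a standstill.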

Moreover, self-organization is not limited to biological systems; it also plays a crucial role in technology and artificial systems. For instance, in the realm of robotics, swarm intelligence—where multiple robots work together to achieve a common goal—demonstrates self-organization. These robots operate based on simple rules and local information, leading to sophisticated group behaviors without a central command. This principle has vast implications for fields such as logistics, environmental monitoring, and even search and rescue operations.
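As a rough illustration of "simple rules and local information," the following sketch has a handful of hypothetical robots arranged in a ring. Each robot repeatedly replaces its own estimate of some shared quantity (say, a meeting point along a line) with the average of its own value and its two neighbours' values. The setup is an assumption made for the example and is far simpler than real swarm algorithms.

```python
def local_averaging_step(values):
    """Each agent moves to the average of itself and its two ring neighbours."""
    n = len(values)
    return [
        (values[(i - 1) % n] + values[i] + values[(i + 1) % n]) / 3
        for i in range(n)
    ]


# Hypothetical starting estimates held by six robots.
estimates = [0.0, 90.0, 180.0, 270.0, 45.0, 135.0]
initial_mean = sum(estimates) / len(estimates)
spread_before = max(estimates) - min(estimates)

for _ in range(50):
    estimates = local_averaging_step(estimates)

spread_after = max(estimates) - min(estimates)
print("spread before:", spread_before)
print("spread after: ", spread_after)
print("agreed value: ", estimates[0])
```

No robot ever sees the whole group, and no central controller computes the answer, yet the estimates converge on the group's average. Agreement here is an emergent result of purely local interactions.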

To further illustrate the concept of self-organization, consider the following table that highlights different examples across various domains:

| Domain | Example of Self-Organization | Description |
|---|---|---|
| Biology | Ant Colonies | Ants communicate through pheromones, creating complex foraging patterns without centralized control. |
| Physics | Phase Transitions | When water freezes, molecules self-organize into a crystalline structure without an external template. |
| Technology | Decentralized Networks | Peer-to-peer networks allow users to share resources without a central server, leading to efficient data distribution. |

In conclusion, self-organization is a powerful concept that reshapes our understanding of how systems operate, whether they are natural or artificial. It emphasizes the importance of local interactions, feedback mechanisms, and the potential for complex behaviors to emerge from simple rules. As we delve deeper into the implications of self-organization, we begin to appreciate the intricate dance of order and chaos that defines both our world and the systems we create.

- What is self-organization? Self-organization refers to the process by which a system spontaneously develops order and complexity without external guidance.

- How does self-organization work in nature? In nature, self-organization occurs through local interactions among components, leading to emergent behaviors, such as flocking in birds or the formation of snowflakes.

- Can self-organization be observed in technology? Yes, self-organization can be seen in technology, particularly in decentralized systems like peer-to-peer networks and swarm robotics.

- What role do feedback loops play in self-organization? Feedback loops help regulate the behavior of a system, allowing it to maintain stability or shift to new states based on internal and external changes.

Implications for Artificial Intelligence

As we dive into the realm of artificial intelligence (AI), it becomes increasingly clear that the principles of cybernetics are not just relevant but foundational. Cybernetics provides a framework for understanding how systems—whether biological or artificial—learn, adapt, and communicate. This relationship is crucial as we design machines that not only perform tasks but also evolve their capabilities over time. Imagine teaching a child to ride a bike; they learn from their mistakes, adjust their balance, and gradually improve. Similarly, AI systems utilize feedback mechanisms from cybernetics to refine their operations based on performance data.

The implications of this relationship are profound. For instance, when we consider the way AI systems process information, we see a direct correlation with cybernetic feedback loops. These loops allow machines to analyze the results of their actions, adjust their strategies, and ultimately enhance their performance. In this context, cybernetics serves as a guiding light, illuminating the path for developing smarter, more responsive AI technologies.

One of the most exciting areas where cybernetics intersects with AI is in the realm of adaptive learning systems. These systems are designed to improve over time, much like an athlete honing their skills. They rely on cybernetic principles to analyze their environment, learn from interactions, and adapt to new challenges. This adaptability is crucial in fields such as robotics, where machines must navigate dynamic environments, and data analysis, where algorithms must continuously refine their predictions based on incoming information.

However, as we embrace the potential of AI informed by cybernetic principles, we must also confront a host of ethical considerations. The more autonomous these systems become, the more questions arise regarding their decision-making processes and the implications of their actions. For example, if an AI system makes a decision that leads to unintended consequences, who is responsible? This dilemma echoes the age-old question of accountability in complex systems. As we advance, it’s essential to establish ethical guidelines that govern the development and deployment of these technologies.

To better understand these implications, let’s take a look at a table summarizing key areas where cybernetics influences AI:

| Area of Influence | Description |
|---|---|
| Feedback Mechanisms | AI systems utilize feedback loops to learn from their actions and improve performance. |
| Adaptive Learning | Systems that adjust their algorithms based on new data, enhancing their capabilities over time. |
| Ethical Considerations | The need for guidelines on accountability and responsibility in AI decision-making processes. |

In conclusion, the interplay between cybernetics and artificial intelligence offers a wealth of opportunities and challenges. As we harness these principles to create more intelligent systems, we must also remain vigilant about the ethical implications of our innovations. The journey ahead is not just about building smarter machines; it’s about ensuring that these machines operate within a framework that respects human values and societal norms.

- What is cybernetics? Cybernetics is the interdisciplinary study of systems, control, and communication in animals and machines.

- How does cybernetics relate to AI? Cybernetics provides the foundational principles that guide the development of adaptive and intelligent systems in AI.

- What are feedback loops? Feedback loops are processes where the output of a system is fed back into it, allowing for adjustments and improvements.

- What ethical concerns arise with AI? Ethical concerns include accountability, decision-making transparency, and the potential for unintended consequences.

Adaptive Learning Systems

Adaptive learning systems are a fascinating intersection of cybernetics and artificial intelligence, where machines learn and evolve based on their interactions with the environment. Imagine a child learning to ride a bicycle: at first, they wobble and struggle to maintain balance, but with practice and feedback from their experiences, they gradually improve. Similarly, adaptive learning systems utilize feedback to enhance their performance over time. This dynamic process enables them to adjust their strategies and behaviors in response to changing conditions, which is vital for their success in various applications.

At the core of these systems is the principle of feedback loops, which allow machines to assess their performance and make necessary adjustments. For instance, in a robotic arm used for assembly, if the arm misaligns a component, the system can detect this deviation through sensors and recalibrate its movements accordingly. This ability to learn from past mistakes is what sets adaptive learning systems apart from traditional programming methods, where instructions are static and do not evolve.
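The robotic-arm example can be sketched as a small error-correction loop. The code below assumes a hypothetical actuator that always lands a fixed, unknown distance (`true_offset`) away from where it was commanded; the system senses the resulting error and nudges its internal estimate of that offset after every attempt. The update rule is a basic error-driven (delta-rule style) adjustment, chosen for illustration rather than drawn from any specific robotics framework.

```python
def calibrate(true_offset, target=10.0, learning_rate=0.3, steps=25):
    """Estimate an unknown actuator offset from repeated error feedback."""
    estimate = 0.0
    errors = []
    for _ in range(steps):
        command = target - estimate        # compensate using the current estimate
        achieved = command + true_offset   # hypothetical actuator model
        error = achieved - target          # misalignment reported by the sensor
        estimate += learning_rate * error  # feedback update of the estimate
        errors.append(abs(error))
    return estimate, errors


estimate, errors = calibrate(true_offset=2.0)
print("learned offset:", round(estimate, 4))
print("first error:", errors[0], "last error:", errors[-1])
```

A statically programmed arm would repeat the same misalignment forever. Here the error shrinks on each attempt because every outcome is fed back into the next command, which is the behaviour the paragraph above describes.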

One of the most exciting applications of adaptive learning systems is in the field of education. Imagine a virtual tutor that tailors lessons to each student's unique learning style and pace. By analyzing a student's progress, the system can identify areas where they struggle and adjust the curriculum to provide additional support. This personalized approach not only enhances learning outcomes but also keeps students engaged and motivated.

Furthermore, adaptive learning systems are making waves in industries like healthcare and finance. In healthcare, for example, these systems can analyze patient data to predict health risks and suggest personalized treatment plans. In finance, they can adapt to market trends, optimizing investment strategies in real time. The potential applications are vast, and as technology advances, the capabilities of these systems will only continue to grow.

However, with great power comes great responsibility. As we integrate adaptive learning systems into our daily lives, we must also consider the ethical implications. Questions arise about autonomy and decision-making. Who is responsible when an adaptive system makes a mistake? How do we ensure that these systems operate fairly and without bias? Addressing these questions is crucial as we navigate the future of technology.

In summary, adaptive learning systems represent a revolutionary approach to machine learning, enabling systems to grow and adapt much like living organisms. As we continue to explore their potential, the key will be balancing innovation with ethical considerations to ensure these systems benefit society as a whole.

- What are adaptive learning systems? Adaptive learning systems are technologies that adjust their operations based on feedback from their environment, enabling them to improve performance over time.

- How do feedback loops work in adaptive learning systems? Feedback loops allow these systems to compare their current performance against desired outcomes, facilitating adjustments to improve accuracy and efficiency.

- What are some applications of adaptive learning systems? They are used in various fields, including education, healthcare, and finance, to personalize experiences and optimize processes.

- What ethical considerations are associated with adaptive learning systems? Concerns include autonomy, accountability, and the potential for bias in decision-making processes.

Ethical Considerations

As we delve deeper into the realms of cybernetics and artificial intelligence, we inevitably stumble upon a tangled web of ethical considerations that demand our attention. With machines becoming increasingly autonomous, we face profound questions about the nature of decision-making and the responsibilities that come with it. For instance, if an AI system makes a decision that leads to unintended consequences, who is held accountable? Is it the creator, the user, or the machine itself? These questions are not merely academic; they resonate deeply in our rapidly evolving technological landscape.

One of the most pressing ethical dilemmas involves the concept of autonomy. As machines learn and adapt, they begin to operate independently, often in ways that might not align with human values or intentions. This raises a critical question: How do we ensure that AI systems reflect ethical standards that prioritize human well-being? The challenge lies in programming ethical frameworks into these systems, which is no small feat. The complexity of human morality makes it difficult to distill our values into algorithms that can be universally applied.

Furthermore, the intersection of cybernetics and AI brings to light issues related to privacy and surveillance. With the integration of cybernetic principles in data analysis, we see a surge in the ability of machines to collect, analyze, and interpret vast amounts of personal information. This capability can lead to enhanced decision-making and efficiency, but it also poses significant risks to individual privacy. As we navigate this digital landscape, we must ask ourselves: Are we willing to sacrifice our privacy for the sake of progress?

Another critical area of concern is the potential for bias in AI systems. These systems learn from data, and if that data is skewed or unrepresentative, the outcomes can perpetuate existing inequalities. For example, an AI trained on biased data may make decisions that disadvantage certain groups. When those decisions later feed back into the data the system learns from, the result can be a self-reinforcing cycle of discrimination. It is essential to recognize that the responsibility lies with us, the developers and users of these technologies, to ensure that our systems are fair and just.
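One simple way to look for this kind of skew is to compare how often a system returns a favorable decision for each group. The Python sketch below is a hedged illustration only: the function names `approval_rates` and `parity_gap`, the group labels, and the sample decisions are all made up, and the gap between group rates is just one of several possible fairness measures, not a complete audit.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the share of favorable decisions per group.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        positives[group] += int(approved)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(decisions):
    """Difference between the highest and lowest group approval rates."""
    rates = approval_rates(decisions)
    return max(rates.values()) - min(rates.values())


# Made-up decisions for two hypothetical groups, for illustration only.
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
rates = approval_rates(sample)
gap = parity_gap(sample)
print(rates)
print(gap)
```

A large gap does not prove discrimination on its own, since groups can differ for legitimate reasons, but it is a useful signal that the data and the decisions deserve a closer look.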

To navigate these ethical waters, a collaborative approach is necessary. Engaging a diverse group of stakeholders—ethicists, technologists, policymakers, and the public—can foster a more comprehensive understanding of the implications of cybernetic technologies. By promoting open dialogue, we can work towards establishing guidelines that govern the development and implementation of AI systems, ensuring they align with societal values.

In summary, the ethical considerations surrounding cybernetics and AI are multifaceted and complex. They challenge us to reflect on our values, question our assumptions, and engage in meaningful conversations about the future we want to create. The path forward is not straightforward, but by prioritizing ethics in our technological advancements, we can harness the power of cybernetics to enhance human life rather than diminish it.

- What is the primary ethical concern regarding AI? The primary concern is the potential for machines to make autonomous decisions that may not align with human values, leading to accountability issues.

- How can we ensure AI systems are fair? By using diverse and representative data sets, engaging various stakeholders in the development process, and continuously monitoring outcomes for bias.

- What role does privacy play in cybernetics? Privacy is a significant concern, as the ability of AI to collect and analyze personal data can lead to surveillance and loss of individual freedoms.

- How can we program ethics into AI? Incorporating ethical frameworks into AI requires collaboration among ethicists, technologists, and policymakers to create guidelines that reflect societal values.

Frequently Asked Questions

- What is cybernetics?

Cybernetics is an interdisciplinary field that studies the structure of regulatory systems. It focuses on how systems communicate and control themselves, whether they are biological organisms or artificial constructs. Think of it as the science of understanding how things work together, like a well-oiled machine!

- Who are the key figures in the development of cybernetics?

One of the most notable pioneers of cybernetics is Norbert Wiener, who laid the groundwork for the field. He explored the concepts of feedback and control, which are central to understanding both natural and artificial systems. Other influential figures include Ross Ashby and Heinz von Foerster, who contributed significantly to the theoretical foundations of cybernetics.

- What are feedback mechanisms in cybernetics?

Feedback mechanisms are processes that allow a system to adjust its behavior based on its output. There are two main types: positive feedback, which amplifies changes, and negative feedback, which stabilizes a system. Imagine a thermostat: it uses negative feedback to maintain a consistent temperature by turning the heat on and off as needed.
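The thermostat example can be sketched in a few lines of Python. This is a toy simulation under stated assumptions, not a model of any real device: the function `simulate_thermostat` and the parameters `band`, `heat_gain`, and `heat_loss` are hypothetical, and the room is reduced to a single number that rises when the heater is on and falls otherwise.

```python
def simulate_thermostat(setpoint, start_temp, steps, band=0.5,
                        heat_gain=1.0, heat_loss=0.4):
    """Simulate an on/off thermostat that uses negative feedback.

    The heater switches on below (setpoint - band) and off above
    (setpoint + band); between the two it keeps its previous state.
    """
    temp = start_temp
    heater_on = False
    history = []
    for _ in range(steps):
        # Feedback: compare the measured temperature with the setpoint.
        if temp < setpoint - band:
            heater_on = True
        elif temp > setpoint + band:
            heater_on = False
        # The room always loses some heat; the heater adds heat when on.
        temp += (heat_gain if heater_on else 0.0) - heat_loss
        history.append((round(temp, 2), heater_on))
    return history


history = simulate_thermostat(setpoint=20.0, start_temp=15.0, steps=40)
for temp, heater_on in history[-5:]:
    print(temp, "heater on" if heater_on else "heater off")
```

Because the correction always pushes against the deviation, the temperature settles into a narrow band around the setpoint instead of drifting away. Flipping the logic, so that the heater turned on when the room was already too warm, would turn this into positive feedback and the temperature would run away.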

- How does self-organization work in systems?

Self-organization is the phenomenon where systems spontaneously develop complex structures without external guidance. This can be seen in nature, such as the formation of patterns in bird flocks or the growth of crystals. It's like watching a dance unfold, where each participant responds to its neighbors and no choreographer directs the whole!

- What are the implications of cybernetics for artificial intelligence?

The principles of cybernetics are crucial for developing artificial intelligence, especially in understanding how machines learn and adapt. Cybernetic concepts help create adaptive learning systems that can improve over time, making AI more efficient and effective in various applications, from robotics to data analysis.

- What ethical considerations arise from cybernetics and AI?

As cybernetics intersects with artificial intelligence, several ethical dilemmas emerge, particularly concerning autonomy and decision-making. Questions about how much control we should give machines and the consequences of their learning processes are vital discussions in the field. It's like handing the keys of a car to an AI—how much trust can we place in it?