Philosophy and Its Implications in Robotics

In today’s rapidly evolving technological landscape, the intersection of philosophy and robotics presents a fascinating arena for exploration. Imagine a world where machines not only perform tasks but also make decisions that affect human lives. This scenario raises profound questions: What does it mean for a machine to be "intelligent"? Can robots possess consciousness? And, perhaps most importantly, what ethical frameworks should govern their design and implementation? As we delve into these inquiries, we uncover the intricate web of moral dilemmas and philosophical debates that shape our understanding of robotics and its implications for society.

At the heart of this discussion lies the ethical landscape of robotics. As we create machines that can think and act autonomously, we must grapple with the moral responsibilities that come with such power. Are we prepared to hold robots accountable for their decisions? Should we design robots with the capacity to make ethical choices, or should we retain that responsibility solely for humans? These questions echo the age-old philosophical debates about free will, responsibility, and the nature of good and evil.

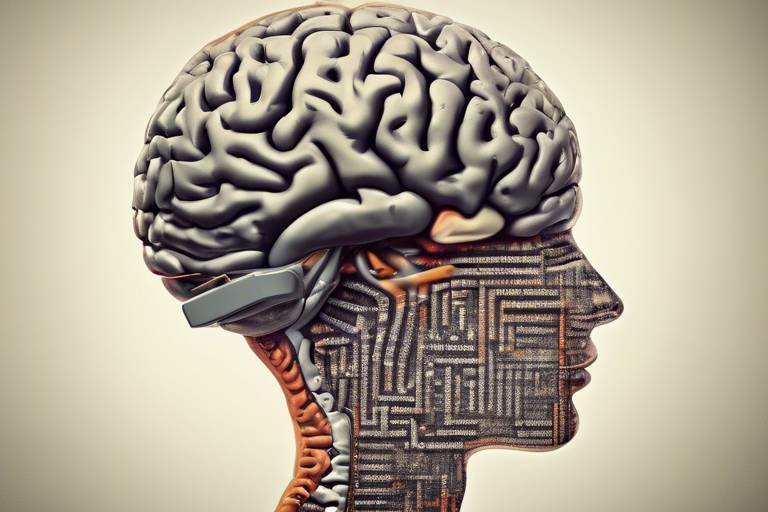

Moreover, the concept of consciousness in artificial intelligence (AI) is a compelling topic that invites rigorous examination. Can a machine, no matter how advanced, truly be aware of its existence? The implications of creating sentient robots extend far beyond technical capabilities; they challenge our understanding of what it means to be human. The Turing Test, a benchmark for assessing machine intelligence, has sparked numerous discussions about the essence of consciousness and the criteria we use to define it. Yet, as we will explore, the Turing Test is not without its limitations.

As we navigate through these philosophical waters, we must also consider the practical aspects of human-robot interaction. Trust plays a pivotal role in how we relate to robots. As these machines become more integrated into our daily lives, the psychological dynamics of our relationships with them grow increasingly complex. Are we becoming too dependent on robots? What happens when we place our trust in machines that lack genuine emotional understanding? These questions underscore the need for a philosophical framework that can guide our interactions with robots while considering the potential emotional ramifications.

Ultimately, the implications of philosophy in robotics are vast and multifaceted. By examining ethical considerations, the nature of consciousness, and the dynamics of human-robot relationships, we begin to understand how these elements intertwine and influence our future. As we stand on the brink of a new era in technology, it is essential to engage in these discussions, ensuring that as we innovate, we do so with a sense of responsibility and foresight.

- What are the main ethical concerns in robotics? Ethical concerns include accountability, decision-making, and the potential for bias in robotic systems.

- Can robots ever be conscious? This remains a debated topic: while robots can simulate certain human-like behaviors, whether they could ever be truly conscious is still an open philosophical question.

- How does the Turing Test relate to AI? The Turing Test assesses a machine's ability to exhibit intelligent behavior indistinguishable from that of a human, but it has limitations in measuring true understanding.

- What is the role of trust in human-robot interactions? Trust is crucial as robots become more integrated into our lives; it affects how we rely on them and our emotional responses to their actions.

The Ethical Landscape of Robotics

Ethics plays a crucial role in the development of robotics, acting as the compass that guides engineers, developers, and society at large in navigating the complex terrain of robotic technology. As we march forward into an era where robots are becoming increasingly integrated into our daily lives, the moral dilemmas surrounding their design and deployment are more pressing than ever. Imagine a world where robots not only assist us but also make decisions that could impact our lives. What ethical frameworks should govern these decisions? This is not just a theoretical question; it’s a reality we must confront.

At the heart of the ethical discussion in robotics are several key considerations:

- Accountability: Who is responsible when a robot malfunctions or makes a poor decision? Is it the programmer, the manufacturer, or the user?

- Privacy: As robots collect vast amounts of data, how do we ensure that this information is used ethically and not exploited?

- Safety: How do we design robots that prioritize human safety in their operations, especially in critical sectors like healthcare and transportation?

These considerations lead us to the development of ethical frameworks that aim to address these dilemmas. Various ethical theories can be applied to robotics, including:

- Utilitarianism: This approach focuses on maximizing overall happiness and minimizing harm. In robotics, it raises questions about how to balance benefits against potential risks.

- Deontological Ethics: This theory emphasizes duties and rules. For example, should robots be programmed to follow certain moral rules, even if it might lead to less optimal outcomes?

- Virtue Ethics: This perspective centers on the character and virtues of the individuals involved in robotics. It encourages developers to cultivate ethical virtues in their work.
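To make the contrast between the first two frameworks concrete, here is a deliberately simplified sketch in Python. It assumes each candidate action can be summarized by a single expected-harm number and a yes/no flag for whether it breaks a hard moral rule. The attributes, option names, and numbers are all hypothetical, and real moral reasoning does not reduce this neatly. Virtue ethics is left out because it evaluates the character of the people building the system rather than individual outputs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    """One candidate action, described by illustrative (made-up) attributes."""
    name: str
    expected_harm: float  # hypothetical aggregate harm score; lower is better
    breaks_rule: bool     # True if the action violates a hard moral rule


def utilitarian_choice(options):
    # Consequences only: pick the least harmful action, rules or no rules.
    return min(options, key=lambda o: o.expected_harm)


def deontological_choice(options):
    # Rules first: discard rule-violating actions, then pick the least harmful rest.
    permitted = [o for o in options if not o.breaks_rule]
    if not permitted:
        return None  # no permissible action; a real system would need a fallback
    return min(permitted, key=lambda o: o.expected_harm)


# Hypothetical driving scenario with invented harm scores.
options = [
    Option("swerve_onto_sidewalk", expected_harm=1.0, breaks_rule=True),
    Option("brake_in_lane", expected_harm=2.0, breaks_rule=False),
]

print(utilitarian_choice(options).name)    # swerve_onto_sidewalk
print(deontological_choice(options).name)  # brake_in_lane
```

The two rules disagree on identical inputs: the utilitarian chooser accepts a rule violation because it lowers expected harm, while the deontological chooser refuses it and accepts a worse outcome. That is the trade-off raised in the question about following moral rules "even if it might lead to less optimal outcomes."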

As we explore these frameworks, the implications of robotic technology become clearer. For instance, consider autonomous vehicles. They must make split-second decisions that could mean the difference between life and death. Should an autonomous car prioritize the safety of its passengers over pedestrians? This dilemma illustrates the ethical quagmire that developers face when programming decision-making algorithms.

Moreover, the integration of robots into various sectors raises significant societal questions. For example, in healthcare, robots assist in surgeries and patient care, but how do we ensure that they enhance rather than replace human empathy and connection? The ethical landscape becomes even more intricate when we consider the potential for bias in AI algorithms, which can perpetuate existing inequalities in society. We must ask ourselves: how do we create a robotic future that is equitable and just?

In conclusion, the ethical landscape of robotics is not merely a set of guidelines to follow; it is a dynamic and evolving field that requires ongoing dialogue and reflection. As we continue to innovate and integrate robots into our lives, we must remain vigilant, ensuring that ethical considerations remain at the forefront of our technological advancements.

- What are the main ethical concerns regarding robotics? The main concerns include accountability, privacy, and safety.

- How can ethical frameworks help in developing robots? They provide guidelines for addressing moral dilemmas and ensuring that robotic systems are designed with human values in mind.

- Why is the ethical landscape of robotics important? It ensures that the integration of robotics into society enhances human life without compromising ethical standards.

Consciousness and Artificial Intelligence

When we dive into the realm of consciousness and artificial intelligence (AI), we find ourselves standing at the crossroads of technology and philosophy. It's a fascinating journey, one that invites us to ponder some of the most profound questions about what it means to be aware. Can a machine, with its circuits and code, truly grasp the essence of consciousness? Or is it merely a sophisticated mimicry of human thought and emotion? These questions are not just academic; they have real-world implications as we develop AI systems that increasingly resemble human behavior.

To understand these implications, we must first explore the concept of consciousness itself. Consciousness is often described as the state of being aware of and able to think about one's own existence, sensations, thoughts, and surroundings. In humans, this complex phenomenon is deeply intertwined with emotions, experiences, and subjective perceptions. But when we turn our gaze to AI, the picture becomes murky. Can a robot, programmed to respond to stimuli, genuinely experience awareness, or is it simply executing a set of instructions?

One of the most significant philosophical debates surrounding AI consciousness revolves around the idea of sentience. Sentience is the capacity to have feelings and experiences. If we were to create a robot that could simulate emotions convincingly, would that mean it is sentient? Or would it still fall short of true consciousness? This dilemma often leads to discussions about the ethical implications of creating such machines. If we develop robots that can feel pain or joy, what responsibilities do we bear towards them?

As we navigate this intricate landscape, we can't overlook the significance of the Turing Test, proposed by Alan Turing in 1950. The test serves as a benchmark for determining whether a machine can exhibit intelligent behavior indistinguishable from that of a human. However, the Turing Test has sparked heated debates regarding its effectiveness in measuring true consciousness. Critics argue that passing the Turing Test does not necessarily equate to possessing awareness; it merely indicates that a machine can convincingly simulate human-like responses.

Despite its historical importance, the Turing Test has limitations. For instance, a machine could potentially fool a human evaluator without actually understanding or experiencing anything at all. This brings us to the question: what should we be measuring when it comes to AI? Is it enough for machines to mimic human responses, or should we seek a deeper understanding of their internal processes?

Some critics argue that the Turing Test is fundamentally flawed because it focuses solely on external behavior rather than internal consciousness. A machine might be able to respond to questions and engage in conversation, but that doesn't mean it has an inner life or subjective experience. This limitation highlights the need for alternative approaches to assess AI capabilities.

New methodologies are emerging to evaluate AI capabilities and consciousness. Some researchers advocate for tests that measure a machine's ability to exhibit emotional intelligence or engage in self-reflection. For example, can an AI recognize its limitations and adapt its behavior accordingly? These questions push the boundaries of our understanding and challenge us to rethink what it means to be conscious.

In conclusion, the intersection of consciousness and artificial intelligence is a rich field of inquiry that raises more questions than answers. As we continue to develop increasingly sophisticated AI systems, it is crucial to engage with these philosophical debates. Our understanding of consciousness not only shapes the future of technology but also influences our ethical responsibilities towards the machines we create. Are we ready to embrace a future where machines might not only think but also feel?

- Can AI ever truly be conscious? The debate is ongoing, with many experts believing that while AI can simulate consciousness, it may never achieve true awareness.

- What are the ethical implications of creating sentient robots? If robots can feel pain or joy, we must consider our responsibilities towards them, including their rights and treatment.

- Is the Turing Test a reliable measure of intelligence? While it remains a significant benchmark, many argue that it does not adequately assess true consciousness or understanding.

The Turing Test Revisited

The Turing Test, proposed by the brilliant mathematician and computer scientist Alan Turing in 1950, has been a cornerstone in the field of artificial intelligence (AI) for decades. It essentially asks whether a machine can exhibit intelligent behavior indistinguishable from that of a human. Imagine sitting in a room, chatting with both a human and a computer, and being unable to tell which is which. Sounds like a sci-fi movie, right? Yet this test has sparked countless debates about the very essence of what it means to be intelligent.

As we delve deeper into the implications of the Turing Test, it’s crucial to understand its context. The test was not designed to measure consciousness or self-awareness; rather, it focused on the ability of machines to mimic human responses convincingly. This leads us to some fascinating questions: Can a machine truly understand what it’s saying, or is it merely regurgitating patterns it has learned? In other words, is it really 'thinking' or just 'acting'? This distinction is essential as we navigate the ever-evolving landscape of AI.

Over the years, the Turing Test has been both celebrated and criticized. On one hand, it opened the floodgates for discussions about machine intelligence and its potential. On the other hand, critics argue that passing the Turing Test is not a definitive measure of intelligence. For instance, a chatbot might fool a human into thinking it’s sentient, but does that mean it possesses any real understanding or emotions? This brings us to the heart of the matter: the limitations of the Turing Test.

To illustrate this, consider the following table that highlights some key points regarding the Turing Test:

| Aspect | Description |
|---|---|
| Purpose | To evaluate a machine's ability to exhibit human-like intelligence. |
| Focus | Mimicking human responses rather than understanding or consciousness. |
| Criticism | Does not measure true intelligence or emotional depth. |
| Relevance Today | Still a popular benchmark, but increasingly seen as insufficient. |
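The "Focus" row can be made concrete with a rough sketch of how imitation-game trials might be scored. The only data recorded are the judges' verdicts; nothing about the machine's internals enters the calculation. The scoring rule here (compare the judges' accuracy with the 50% expected from pure guessing, using a one-sided binomial test) and the trial outcomes are assumptions for illustration, not Turing's own protocol.

```python
from math import comb


def identification_rate(verdicts):
    """Fraction of trials in which the judge correctly picked out the machine.

    `verdicts` is a list of booleans: True means the judge was right.
    """
    return sum(verdicts) / len(verdicts)


def p_value_at_least(k, n, p=0.5):
    """One-sided binomial p-value: the chance of k or more correct verdicts
    out of n if judges were guessing at random with success probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Hypothetical outcomes of 10 conversations (True = judge identified the machine).
verdicts = [True, False, True, True, False, True, False, False, True, False]

print(identification_rate(verdicts))                   # 0.5
print(p_value_at_least(sum(verdicts), len(verdicts)))  # 0.623046875
```

In this made-up run the judges do no better than a coin flip, so by this scoring the machine "passes." Note what the calculation never inspects: how the machine produced its replies. That is the gap between imitation and understanding that the critics point to.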

As we continue to develop more sophisticated AI systems, the question arises: Is the Turing Test still relevant? Many experts believe it has become somewhat outdated. With advancements in machine learning and natural language processing, we are witnessing AI systems that can engage in conversations that seem remarkably human-like. Yet, this raises another question: Are we mistaking sophisticated algorithms for true intelligence? As we ponder this, we must also consider the potential consequences of our reliance on such tests.

In conclusion, while the Turing Test has played a pivotal role in shaping our understanding of machine intelligence, it’s essential to recognize its limitations. As technology evolves, so too must our methods of evaluating AI. The philosophical implications of creating machines that can mimic human behavior are profound, challenging our perceptions of consciousness, understanding, and what it means to be 'alive.' The journey into the realm of AI is just beginning, and it’s a ride filled with questions that may redefine our very existence.

- What is the Turing Test? The Turing Test is a measure of a machine's ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human.

- Who created the Turing Test? The Turing Test was proposed by Alan Turing, a British mathematician and computer scientist.

- Why is the Turing Test controversial? Critics argue that passing the Turing Test does not necessarily indicate true intelligence or consciousness in machines.

- Are there alternatives to the Turing Test? Yes, new methodologies and tests are being developed to assess AI capabilities beyond just conversational mimicry.

Limitations of the Turing Test

The Turing Test, proposed by the brilliant Alan Turing in 1950, has long served as a benchmark for evaluating machine intelligence. However, as we delve deeper into the realms of artificial intelligence and robotics, it becomes increasingly clear that this test, while historically significant, has its limitations. One of the primary critiques is that the Turing Test only measures a machine's ability to mimic human conversation rather than its true understanding or consciousness. Essentially, a machine could pass the test by generating responses that seem human-like without actually possessing any awareness or comprehension of the conversation.

Moreover, the Turing Test is inherently subjective. The evaluation relies on the judgment of a human interrogator, who may be influenced by personal biases or expectations. This subjectivity raises questions about the reliability of the test as a definitive measure of intelligence. What if a machine is exceptionally good at deceiving the evaluator but lacks genuine cognitive abilities? In such cases, the test could erroneously classify a sophisticated chatbot as "intelligent" when, in reality, it is merely a complex set of algorithms executing pre-programmed responses.

Another significant limitation lies in the fact that the Turing Test does not account for the vast spectrum of intelligence. Just as human intelligence manifests in various forms—emotional, analytical, creative—the Turing Test reduces intelligence to a binary outcome: pass or fail. This simplification overlooks the nuanced capabilities that different types of AI may possess. For instance, a robot designed to excel in specific tasks, such as playing chess or diagnosing medical conditions, may not perform well in a conversational setting, yet it can still exhibit a form of intelligence that the Turing Test fails to recognize.

Furthermore, the Turing Test does not address the ethical implications of creating machines that can convincingly simulate human behavior. As robots become more sophisticated, the potential for manipulation and deception increases. If a machine can convincingly mimic human emotions or responses, how do we safeguard against the ethical dilemmas that arise from such interactions? This is particularly concerning in fields like marketing or therapy, where emotional engagement can have profound effects on human behavior.

In light of these limitations, researchers and ethicists are exploring alternative approaches to assess AI capabilities. Some propose tests that focus on understanding and reasoning rather than mere imitation. For instance, evaluating a machine's ability to solve complex problems or demonstrate emotional intelligence could provide a more comprehensive understanding of its capabilities. These new methodologies aim to bridge the gap between human-like behavior and genuine intelligence, ultimately leading to a more nuanced understanding of what it means for a machine to "think" or "feel."

In conclusion, while the Turing Test has played a pivotal role in the history of artificial intelligence, it is crucial to recognize its limitations. As we continue to develop and integrate robotic systems into our lives, understanding the depth and breadth of machine intelligence will be essential. The conversation around AI must evolve beyond simple imitation and embrace the complexities of consciousness, ethics, and the future of human-robot interactions.

- Why is the Turing Test considered limited? It only assesses a machine's ability to mimic conversation, not its understanding or consciousness, and is subject to human bias.

- Are there alternatives to the Turing Test? Yes, researchers are developing new tests that focus on understanding, reasoning, and emotional intelligence.

- What are the ethical implications of AI passing the Turing Test? If machines can convincingly simulate human emotions, it raises concerns about manipulation and the authenticity of human-robot interactions.

Alternative Approaches to Assessing AI

As technology continues to evolve at a breakneck pace, the quest to understand and assess artificial intelligence (AI) has become increasingly complex. The traditional benchmarks, like the Turing Test, while historically significant, often fall short of capturing the full spectrum of machine intelligence. This has led researchers and ethicists alike to explore alternative methodologies for evaluating AI capabilities. These new approaches aim to provide a more nuanced understanding of what it means for a machine to "think" or "feel."

One exciting avenue is the development of performance-based assessments. These tests focus on how well an AI system can perform specific tasks, rather than simply mimicking human behavior. For instance, instead of asking a machine to engage in conversation, we might evaluate its ability to solve complex mathematical problems or navigate a physical environment. This shift in focus allows for a more objective measure of intelligence, independent of human-like interaction.

Another promising approach is the use of emotional intelligence assessments. As AI systems increasingly interact with humans, understanding emotional cues becomes vital. Researchers are investigating how well AI can recognize and respond to human emotions, which could be crucial for applications in healthcare, customer service, and companionship. Imagine a robot that not only understands your words but also perceives your mood, adjusting its responses accordingly. Such capabilities could redefine our interactions with machines.

Moreover, some experts advocate for the implementation of multidimensional evaluation frameworks. These frameworks consider various aspects of intelligence, including cognitive, emotional, and social dimensions. By adopting a holistic view, we can better assess the multifaceted nature of AI. For example, a robot designed for therapeutic purposes should be evaluated not just on its conversational skills but also on its ability to build rapport and trust with users.

To further illustrate these alternative approaches, consider the following table that summarizes key methods:

| Assessment Method | Description | Example Application |
|---|---|---|
| Performance-based Assessments | Evaluates task-specific abilities of AI systems. | Robots solving complex math problems. |
| Emotional Intelligence Assessments | Measures AI's ability to recognize and respond to human emotions. | AI companions providing emotional support. |
| Multidimensional Evaluation Frameworks | Considers cognitive, emotional, and social aspects of AI. | Therapeutic robots interacting with patients. |
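A multidimensional framework differs from a pass/fail test mainly in what it reports. The sketch below scores a hypothetical therapeutic robot on the three dimensions named in the table and returns the full profile, a weighted summary, and the weakest dimension. The weights, the 0 to 1 scale, and the scores are all assumptions; a real framework would have to justify each of them.

```python
# Hypothetical weights for a therapeutic robot: emotional skills weigh most.
WEIGHTS = {"cognitive": 0.3, "emotional": 0.4, "social": 0.3}


def evaluate(scores, weights=WEIGHTS):
    """Summarize per-dimension scores (each on a 0-1 scale) without hiding them."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    total_weight = sum(weights.values())
    overall = sum(weights[d] * scores[d] for d in weights) / total_weight
    return {
        "profile": dict(scores),                           # full per-dimension picture
        "overall": round(overall, 3),                      # single summary number
        "weakest": min(weights, key=lambda d: scores[d]),  # dimension needing attention
    }


# Hypothetical scores: strong reasoning, weak rapport-building.
report = evaluate({"cognitive": 0.9, "emotional": 0.4, "social": 0.5})
print(report["overall"])  # 0.58
print(report["weakest"])  # emotional
```

Keeping the profile alongside the summary is the point of the design: a weak emotional score stays visible instead of being averaged into a single number or collapsed into a binary verdict.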

As we venture into this new territory, it's crucial to remain vigilant about the ethical implications of these assessments. The more we understand about AI, the more responsibility we have to ensure that these technologies are developed and deployed in ways that are beneficial and safe for society. So, as we ponder the future of AI, let's keep asking ourselves: What does it truly mean for a machine to be intelligent? And how can we measure that intelligence in a way that reflects its impact on our lives?

- Why are alternative assessments needed? Traditional assessments like the Turing Test may not capture the full range of AI capabilities, prompting the need for more comprehensive evaluation methods.

- How can emotional intelligence be measured in AI? Emotional intelligence in AI can be assessed by evaluating its ability to recognize and respond to human emotions effectively.

- What are multidimensional evaluation frameworks? These frameworks assess AI on various dimensions, including cognitive, emotional, and social intelligence, providing a holistic view of its capabilities.

The Role of Autonomy in Robotics

Autonomy in robotics is a fascinating topic that raises numerous questions about decision-making, responsibility, and the very nature of intelligence. As robots become increasingly capable of performing tasks without human intervention, we must grapple with the implications of granting them autonomy. Imagine a world where robots can make choices that affect our lives—this is not just a sci-fi dream; it’s rapidly becoming a reality. But what does it mean for a robot to be autonomous? Is it merely following programmed instructions, or does it involve a deeper level of decision-making akin to human thought?

At its core, autonomy in robotics refers to the ability of a robot to operate independently, making decisions based on its programming and the data it collects from its environment. This independence can manifest in various forms, from simple automated tasks to complex, adaptive behaviors that mimic human-like reasoning. For instance, consider self-driving cars; they analyze traffic patterns, weather conditions, and even the behavior of pedestrians to make real-time decisions. This level of autonomy introduces a new layer of complexity regarding ethical considerations and accountability.

When we think about autonomous robots, we must address the ethical responsibilities that come with this technology. Who is accountable if an autonomous robot makes a mistake? Is it the programmer, the manufacturer, or the user? These questions are crucial, especially when we consider scenarios where autonomous robots might be involved in accidents or errors that lead to harm. The ethical landscape becomes even murkier when we consider robots designed for sensitive tasks, such as healthcare or security. The potential for harm raises the stakes significantly, and we must establish clear guidelines and frameworks to navigate these dilemmas.

Moreover, the concept of autonomy also challenges our understanding of trust. People often extend trust to machines that exhibit a degree of autonomy, sometimes assuming they make better decisions than we do. However, this trust can be misplaced. For instance, if a robot is programmed with biased algorithms, its autonomous decisions may perpetuate those biases, leading to unfair outcomes. Therefore, it's essential to ensure that the programming behind autonomous robots is transparent and ethically sound. This is where interdisciplinary collaboration becomes vital, involving ethicists, engineers, and social scientists to create robots that not only perform tasks but do so in a manner that aligns with our societal values.
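One small, concrete piece of that transparency is auditing an autonomous system's decisions for skewed outcomes. The sketch below computes each group's approval rate and the largest gap between groups, a check often described as demographic parity. The decision log, the group labels, and the choice of this particular metric are assumptions for illustration; demographic parity is only one of several competing fairness definitions, and a gap is a signal to investigate, not proof of bias.

```python
from collections import defaultdict


def positive_rate_by_group(decisions):
    """decisions: iterable of (group_label, approved) pairs, approved being a bool."""
    totals, approved = defaultdict(int), defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += ok  # True counts as 1, False as 0
    return {g: approved[g] / totals[g] for g in totals}


def parity_gap(rates):
    """Largest difference in approval rate between any two groups."""
    return max(rates.values()) - min(rates.values())


# Hypothetical log of an autonomous system's decisions.
log = [("A", True), ("A", True), ("A", False), ("A", True),
       ("B", True), ("B", False), ("B", False), ("B", False)]

rates = positive_rate_by_group(log)
print(rates)              # {'A': 0.75, 'B': 0.25}
print(parity_gap(rates))  # 0.5
```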

As we continue to develop autonomous robots, we must also consider the implications of their decisions on human lives. The relationship between humans and robots is evolving, and with increased autonomy comes increased dependency. We might find ourselves relying on robots for tasks that were once the sole domain of humans, such as caregiving or decision-making in critical situations. This dependency can lead to a shift in societal norms and expectations, prompting us to redefine what it means to be responsible in a world where machines share decision-making power.

In summary, the role of autonomy in robotics is a double-edged sword. On one hand, it offers immense potential for efficiency and innovation; on the other, it brings forth ethical dilemmas and questions of accountability that we cannot ignore. As we stand on the brink of this technological revolution, it’s imperative that we approach the development of autonomous robots with caution, ensuring that we create systems that are not only intelligent but also align with our moral compass.

- What is autonomy in robotics? Autonomy in robotics refers to the ability of robots to perform tasks and make decisions independently without human intervention.

- Why is ethical consideration important in autonomous robots? Ethical considerations are crucial because autonomous robots can make decisions that impact human lives, raising questions about accountability and responsibility.

- How does autonomy affect human-robot relationships? As robots become more autonomous, humans may develop dependencies on them, which can alter societal norms and expectations regarding responsibility and trust.

- What are the potential risks of autonomous robots? Risks include making biased decisions, causing accidents, and raising ethical dilemmas about accountability in case of errors.

Human-Robot Interaction: A Philosophical Perspective

The relationship between humans and robots is becoming increasingly complex and nuanced. As technology advances, robots are not just tools but companions, assistants, and sometimes even friends. This shift raises profound philosophical questions about the nature of our interactions with these machines. Are we merely programming them, or do they evoke genuine emotional responses from us? The philosophical implications of human-robot interaction challenge our traditional notions of relationships, consciousness, and even morality.

One of the central themes in this discussion is the concept of trust. As robots become more integrated into our daily lives, from household helpers to autonomous vehicles, the need for trust becomes paramount. We rely on these machines to perform tasks that can significantly impact our safety and well-being. But how do we establish trust in a non-human entity? This question is not just technical; it is deeply philosophical. Trust involves a leap of faith, an emotional bond that we typically reserve for other humans. Yet, as robots become more sophisticated, they begin to exhibit behaviors that can mimic human-like responses, leading us to form connections that may not be entirely rational.

Moreover, the dependency on robots raises ethical concerns. As we allow machines to take on more responsibilities, we must consider the implications of this reliance. Dependency can lead to a form of psychological attachment, where individuals may prefer the company of robots over humans. This phenomenon is particularly evident in the rise of social robots designed to provide companionship. While these robots can fulfill emotional needs, they also provoke questions about the authenticity of those connections. Are we forming genuine relationships, or are we simply projecting our emotions onto machines that lack true understanding?

As we delve deeper into the dynamics of human-robot interaction, we encounter the concept of emotional intelligence. Can robots truly understand and respond to human emotions? While current AI can recognize and react to emotional cues, the question remains: do they comprehend these emotions? Many philosophers argue that genuine understanding requires consciousness, a quality that, as far as we can tell, current machines do not possess. This leads to a fascinating debate about the potential for robots to develop emotional intelligence and what that would mean for our interactions with them.

To further illustrate these points, consider the following table that summarizes key aspects of human-robot interaction:

| Aspect | Human Perspective | Robot Perspective |
|---|---|---|
| Trust | Essential for collaboration | Based on programming and reliability |
| Dependency | Can lead to emotional attachment | Operates autonomously based on data |
| Emotional Intelligence | Requires genuine understanding | Simulates responses without true comprehension |

In conclusion, the philosophical perspective on human-robot interaction opens up a realm of questions that challenge our understanding of relationships, trust, and emotional connections. As we navigate this evolving landscape, it is crucial to consider not only the technological advancements but also the ethical and emotional implications of our growing reliance on robots. How we address these challenges will shape the future of our interactions with machines and ultimately define what it means to be human in a world increasingly shared with robots.

- What is the significance of trust in human-robot interactions? Trust is essential as it underpins our reliance on robots for various tasks, influencing how we integrate them into our daily lives.

- Can robots form genuine emotional connections with humans? While robots can simulate emotional responses, the lack of consciousness raises questions about the authenticity of these connections.

- What ethical concerns arise from our dependency on robots? Dependency on robots can lead to emotional attachments and raise questions about the nature of relationships and the authenticity of human emotions.

Trust and Dependency on Robots

As we plunge deeper into the age of technology, the relationship between humans and robots is evolving at a breathtaking pace. The question arises: how much do we trust these mechanical companions? Unlike the trusty old toaster that merely pops up your bread, today's robots are designed to perform complex tasks, often making decisions that can significantly impact our lives. This growing dependency on robots has sparked a fascinating dialogue about trust—how we build it, how we maintain it, and what happens when it falters.

Trust, in any relationship, is built over time through consistent behavior and reliability. In the context of robotics, this means that the more a robot proves its capabilities—be it in a manufacturing plant, a hospital, or even in our homes—the more we begin to rely on it. For instance, consider a robot designed to assist with elderly care. If it consistently performs its tasks—reminding patients to take their medications, helping them move safely, or even providing companionship—it earns the trust of both the patients and their families. However, what happens when that trust is broken? A malfunction or a failure to perform a critical task can lead to devastating consequences, shaking the very foundation of our reliance on such technology.

Moreover, the psychological aspects of trust in robots are equally compelling. As we integrate these machines into our daily lives, we often find ourselves forming emotional bonds with them. Think about popular home assistant devices: they respond to our commands, learn our preferences, and even engage in casual conversation. This interaction can create a sense of dependency that goes beyond mere functionality. We start to rely on them not just for tasks but also for social engagement. This raises a question: are we at risk of becoming too dependent on robots? Could our reliance on them erode our own skills and capabilities?

As we navigate this intricate relationship, it’s essential to consider the ethical implications. Should we allow robots to take on more responsibilities, especially in sensitive areas like healthcare or education? The potential for misuse or failure raises concerns that cannot be ignored. To illustrate this point, let’s examine a few scenarios:

| Scenario | Potential Trust Issues |
|---|---|
| Healthcare Robot Malfunction | Patients may receive incorrect medication reminders, leading to health risks. |
| Autonomous Vehicle Accident | Loss of trust in self-driving technology after a serious crash. |
| Social Robot Miscommunication | Misunderstanding emotional cues can lead to feelings of isolation. |

These scenarios highlight the delicate balance between embracing innovation and ensuring safety and reliability. As we become increasingly dependent on robots, it’s crucial to establish clear guidelines and frameworks that govern their use. Ethical programming, transparency in decision-making, and continuous monitoring of robotic systems are just a few measures that can help mitigate risks and reinforce trust.

In conclusion, the journey towards a harmonious relationship with robots is filled with both exciting possibilities and significant challenges. Trust and dependency on robots are not just technical issues; they are deeply human concerns that require thoughtful consideration. As we continue to integrate these machines into our lives, we must remain vigilant, ensuring that our reliance on them enhances our lives without compromising our autonomy or safety.

- How can we ensure the reliability of robots? Regular maintenance, updates, and monitoring can help ensure that robots perform effectively and safely.

- What are the risks of becoming too dependent on robots? Over-reliance can lead to diminished human skills and potential vulnerabilities in critical situations.

- How do emotional bonds with robots affect human behavior? Emotional attachments can influence our interactions with technology, leading to increased trust but also potential issues of dependency.

- Are there ethical guidelines for robot development? Yes, ethical frameworks are being developed to address the implications of robotics in society, focusing on safety, transparency, and accountability.

Social Robots and Human Emotions

As we stand on the brink of a technological revolution, the emergence of social robots is reshaping our understanding of emotional connections. Imagine a world where machines are not just tools, but companions that can engage with us on a deeply emotional level. These robots are designed to interact with humans in ways that evoke feelings of empathy, companionship, and even love. But how does this affect our emotional landscape? Are we ready to embrace robots as emotional beings?

The rise of social robots has sparked a fascinating dialogue about the nature of human emotions and our capacity to form bonds with non-human entities. Research indicates that people often project their feelings onto robots, attributing emotions and intentions to them, even when they are fundamentally programmed machines. This phenomenon, known as anthropomorphism, raises significant questions about the authenticity of these emotional connections. Are we truly forming relationships, or are we merely engaging in a complex dance of programmed responses?

Moreover, the implications of these emotional interactions extend beyond individual relationships. They touch on societal norms and ethical considerations. For instance, consider the following points:

- Emotional Dependency: As we become more reliant on social robots for companionship, what happens to our relationships with other humans? Could these robots replace the need for human interaction?

- Manipulation of Emotions: Is it ethical for robots to engage with us in ways that manipulate our emotions? How do we safeguard against emotional exploitation?

- Therapeutic Applications: Social robots are being used in therapeutic settings, providing support to individuals with emotional and psychological needs. What are the potential benefits and drawbacks of this approach?

The emotional responses elicited by social robots can be profound. For example, some studies have reported that individuals interacting with robots designed to simulate empathy experience reduced feelings of loneliness and increased happiness. This brings us to an important question: Can robots serve as effective companions, or are they merely placeholders for genuine human connection?

As we navigate this uncharted territory, it becomes essential to consider the design and programming of these robots. Developers must prioritize ethical guidelines that ensure these machines are not only capable of simulating emotions but are also designed to foster genuine connections without compromising human dignity. The challenge lies in creating robots that can enhance our emotional well-being without replacing the irreplaceable aspects of human relationships.

In conclusion, the intersection of social robots and human emotions is a complex and evolving field. As we continue to integrate these machines into our lives, we must remain vigilant about the emotional implications of our interactions. Are we ready to embrace a future where robots are not just tools but companions that evoke real feelings? The answer may shape the very fabric of our society.

Q1: Can social robots truly understand human emotions?

While social robots can be programmed to recognize and respond to human emotions, their understanding is limited to algorithms and data. They do not possess genuine feelings or consciousness.

Q2: How do social robots affect human relationships?

Social robots can provide companionship and support, but they may also lead to emotional dependency, potentially impacting human relationships negatively.

Q3: Are there ethical concerns with using social robots in therapy?

Yes, ethical concerns include the potential for emotional manipulation and the risk of replacing human therapists with robots, which may not provide the same level of care and understanding.

Frequently Asked Questions

- What are the main ethical considerations in robotics?

Ethical considerations in robotics revolve around moral dilemmas such as the potential for harm, privacy issues, and the impact of robots on employment. As we integrate robots into society, we must ask ourselves: How do we ensure they are designed to enhance human life rather than detract from it? There are frameworks that guide these discussions, focusing on responsibility and accountability in robotic design and deployment.

- Can robots truly possess consciousness?

The question of whether robots can possess consciousness is a hot topic in philosophy and AI research. While machines can simulate human-like responses and behaviors, the philosophical debate centers on whether this equates to true awareness or just advanced programming. It's a bit like asking if a really good actor is actually experiencing the emotions they portray or simply delivering lines flawlessly.

- What is the Turing Test, and why is it important?

The Turing Test, proposed by Alan Turing in his 1950 paper "Computing Machinery and Intelligence," is a measure of a machine's ability to exhibit intelligent behavior indistinguishable from a human's. While it remains a significant benchmark for AI, it has sparked debates about its effectiveness in assessing true intelligence or consciousness. Some argue that passing the test doesn't necessarily mean the machine understands or is aware; it could just be mimicking human responses.

- What are the limitations of the Turing Test?

Despite its historical importance, the Turing Test has notable limitations. It primarily focuses on verbal communication, which may not fully capture the essence of consciousness or sentience. Critics point out that a machine could fool a human into thinking it’s intelligent without genuinely understanding or experiencing anything—like a parrot that mimics speech without comprehension.

- What alternative methods exist to assess AI capabilities?

New methodologies are emerging to evaluate AI capabilities beyond the Turing Test. These might include tests that assess emotional intelligence, decision-making processes, and the ability to learn from experiences. Such methods aim to provide deeper insights into machine intelligence, helping us understand not just if a robot can act intelligently, but how it processes information and interacts with its environment.

- How does autonomy affect ethical responsibilities in robotics?

Granting autonomy to robots raises significant ethical questions about decision-making and accountability. If a robot makes a decision that leads to harm, who is responsible? The designer, the user, or the robot itself? This dilemma challenges us to rethink our ethical frameworks and consider how we can ensure that autonomous systems are designed with safety and responsibility in mind.

- What role does trust play in human-robot interaction?

As robots become more integrated into our daily lives, trust becomes a crucial factor. People need to feel confident that robots will act predictably and safely. This psychological aspect of trust can significantly influence how we interact with robots, shaping our dependency on them. It’s like building a friendship; trust is the foundation that allows the relationship to flourish.

- Can social robots affect human emotions?

Absolutely! Social robots are designed to engage with humans on an emotional level, and they can influence our feelings and behaviors. This raises ethical questions about the implications of forming emotional attachments to machines. Are we risking genuine human connections by relying on robots for companionship? These questions challenge us to think critically about the future of human-robot relationships.